Goodhart’s Law is named for Charles Goodhart, a British economist who in 1975 popularized the idea that

> Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.

I think it was meant humorously, but the pungent truth of the statement made it stick.

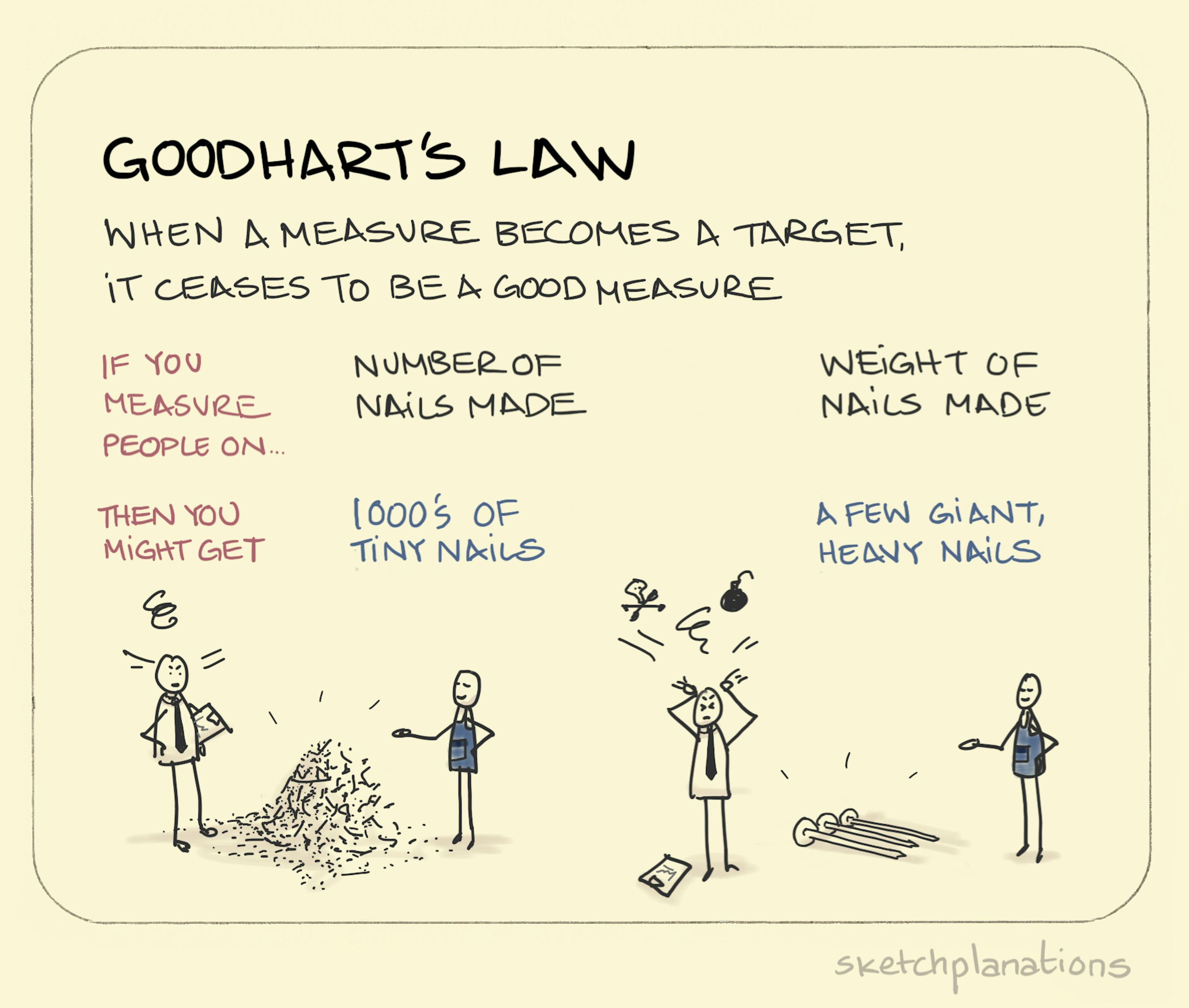

The point is sound. Essentially, any statistic that becomes a target ceases to be a good measurement.

Chasing a statistic leads people and organizations to ignore the unintended second-, third-, and further-order consequences, which may, in fact, MATTER A WHOLE LOT.

Prranshu Yadav has a good post featuring four “profound ways that Goodhart’s Law subtly affects your life.” I recommend reading it.

Goodhart’s Law and its effects show up all over the place. Pay attention to which numbers an organization treats as the things that matter, and you will see the law in action.

One system that nearly everyone engages with at some point in their life is healthcare. Healthcare systems live and die by their metrics, and optimizing for a metric, such as reducing patient readmissions, can have unintended consequences. Mayo Clinic defines hospital readmission as a patient's admission to a hospital within 30 days after being discharged from an earlier hospital stay. The standard benchmark used by the Centers for Medicare & Medicaid Services (CMS) is the 30-day readmission rate.

Of course, we need to reduce readmissions. Makes sense, right?

However, when held accountable for readmissions, hospitals tend to focus too narrowly on the measurement, potentially at the expense of other programs and needs. To reduce readmissions, a hospital may avoid readmitting patients who genuinely need care, or it may assign most of its doctors to the emergency room, to the detriment of other hospital areas, in an effort to get the initial diagnosis right.
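To make the incentive concrete, here is a minimal Python sketch of a simplified 30-day readmission rate: discharges followed by another admission of the same patient within 30 days, divided by all discharges. The `Stay` record and `readmission_rate` function are hypothetical, and the sketch deliberately ignores the risk adjustment and exclusions (such as planned readmissions) used in CMS's actual measures. The point is only to show how turning away one returning patient makes the number look perfect while that patient's need for care goes unmet.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Stay:
    """One hospital stay (hypothetical record layout for illustration)."""
    patient_id: str
    admitted: date
    discharged: date


def readmission_rate(stays: list[Stay], window_days: int = 30) -> float:
    """Simplified readmission rate: the fraction of discharges followed by another
    admission of the same patient within `window_days`. No risk adjustment or
    planned-readmission exclusions are applied (an assumption of this sketch)."""
    by_patient: dict[str, list[Stay]] = {}
    for stay in stays:
        by_patient.setdefault(stay.patient_id, []).append(stay)

    discharges = 0
    readmissions = 0
    for patient_stays in by_patient.values():
        patient_stays.sort(key=lambda s: s.admitted)  # chronological order per patient
        for i, stay in enumerate(patient_stays):
            discharges += 1
            # Count this discharge as "readmitted" if the next stay starts within the window.
            if i + 1 < len(patient_stays):
                gap = (patient_stays[i + 1].admitted - stay.discharged).days
                if gap <= window_days:
                    readmissions += 1
    return readmissions / discharges if discharges else 0.0


stays = [
    Stay("A", date(2023, 1, 1), date(2023, 1, 5)),
    Stay("A", date(2023, 1, 15), date(2023, 1, 20)),  # back 10 days after discharge
    Stay("B", date(2023, 1, 3), date(2023, 1, 6)),
    Stay("C", date(2023, 2, 1), date(2023, 2, 4)),
    Stay("C", date(2023, 3, 20), date(2023, 3, 22)),  # 44 days later: not a readmission
    Stay("D", date(2023, 1, 10), date(2023, 1, 12)),
]
print(f"{readmission_rate(stays):.3f}")  # 0.167 (1 of 6 discharges)

# Gaming the metric: patient A's return visit is turned away instead of admitted.
gamed = [s for s in stays if not (s.patient_id == "A" and s.admitted == date(2023, 1, 15))]
print(f"{readmission_rate(gamed):.3f}")  # 0.000
```

The rate improves from about 17% to zero, yet nothing about patient A's health got better; the measurement simply stopped seeing the problem.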

Keep your eyes open for examples of Goodhart’s Law in action. You will be astounded by what you find.